Editor's recommendation:

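This article introduces the system architectures of driverless cars and the role of each module. Examples include the perception subsystem, the mission planning subsystem, the motion planning subsystem, the vehicle controller, and the safety module that monitors sensor data.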

This article comes from CSDN and was edited and recommended by Anna of 火龙果软件 (Huolongguo Software).


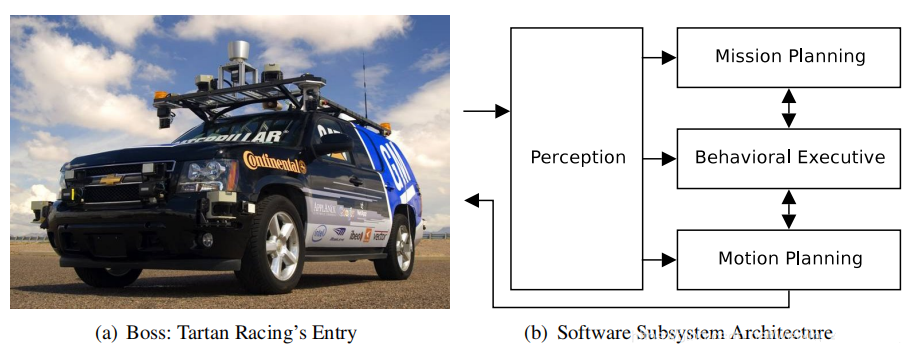

1. Boss (Carnegie Mellon University)

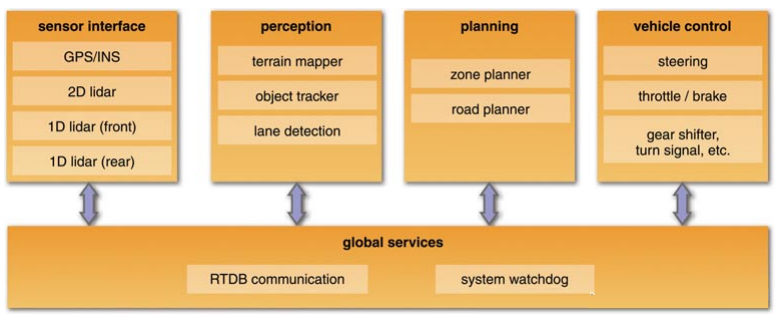

The role of each module is as follows:

The Perception subsystem processes sensor data from the vehicle and produces a collection of semantically-rich data elements such as the current pose of the robot, the geometry of the road network, and the location and nature of various obstacles such as road blockages and other vehicles.

The Mission Planning subsystem computes the fastest route to reach the next check-point from all possible locations within the road network, encoded as an estimated time-to-goal from each waypoint in the network. This estimate incorporates knowledge of road blockages, speed limits, intersection complexity, and the nominal time required to make special maneuvers such as lane changes or U-turns.
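One way to produce this kind of time-to-goal encoding is a single Dijkstra search run backwards from the checkpoint over a waypoint graph. The sketch below is a minimal illustration, not Boss's actual implementation. It assumes a hypothetical edge format in which each directed edge carries a length in meters, a speed limit in m/s, an extra maneuver penalty in seconds (for a lane change or U-turn), and a blocked flag.

```python
import heapq
import math
from collections import defaultdict

def time_to_goal(edges, goal):
    """Return {waypoint: estimated seconds to reach goal} for every reachable waypoint.

    edges: list of (src, dst, length_m, speed_limit_mps, maneuver_penalty_s, blocked).
    This tuple format is a hypothetical choice made for illustration.
    """
    # Reverse the graph so that one search from the goal covers every start point.
    reverse = defaultdict(list)
    for src, dst, length, speed, penalty, blocked in edges:
        if blocked:
            continue  # known road blockages are dropped from the graph
        cost = length / speed + penalty  # nominal travel time plus maneuver cost
        reverse[dst].append((src, cost))

    best = {goal: 0.0}
    queue = [(0.0, goal)]
    while queue:
        t, node = heapq.heappop(queue)
        if t > best.get(node, math.inf):
            continue  # stale queue entry
        for prev, cost in reverse[node]:
            candidate = t + cost
            if candidate < best.get(prev, math.inf):
                best[prev] = candidate
                heapq.heappush(queue, (candidate, prev))
    return best

# Usage with a made-up four-edge network:
edges = [
    ("A", "B", 100.0, 10.0, 0.0, False),  # 10 s
    ("B", "G", 200.0, 20.0, 0.0, False),  # 10 s
    ("A", "G", 150.0, 10.0, 0.0, True),   # blocked, ignored
    ("C", "B", 50.0, 10.0, 5.0, False),   # 5 s driving + 5 s U-turn penalty
]
print(time_to_goal(edges, "G"))  # {'G': 0.0, 'B': 10.0, 'A': 20.0, 'C': 20.0}
```

Reversing the edges lets a single search yield an estimate for every waypoint at once. This fits the description of a time-to-goal available "from each waypoint in the network".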

The Behavioral Executive combines the global route information provided by the Mission Planner with local traffic and obstacle information provided by Perception to select a sequence of incremental goals for the Motion Planning subsystem to execute. Typical goals include driving to the end of the current lane or maneuvering to a particular parking spot, and their issuance is predicated on conditions such as precedence at an intersection or the detection of certain anomalous situations.
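The toy sketch below shows how route information and local perception facts might combine into one incremental goal. The `Situation` record, its fields, and the goal strings are all hypothetical, and the real Behavioral Executive handles far more cases.

```python
from dataclasses import dataclass

@dataclass
class Situation:
    # Hypothetical summary of the local facts Perception reports.
    at_intersection: bool
    has_precedence: bool   # True once every vehicle that arrived earlier has gone
    lane_blocked: bool     # an anomalous situation, e.g. a road blockage
    in_parking_zone: bool
    target: str            # next waypoint or parking spot from the Mission Planner

def select_goal(s: Situation) -> str:
    """Pick the next incremental goal for the motion planner (illustrative only)."""
    if s.lane_blocked:
        return "recover_from_blockage"
    if s.at_intersection and not s.has_precedence:
        return "wait_for_precedence"
    if s.in_parking_zone:
        return f"park_at:{s.target}"
    return "drive_to_lane_end"
```

The function only decides *what* to do next. *How* to do it is left to the motion planner, which mirrors the separation between goal selection and goal execution described in the next paragraph.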

The Motion Planning subsystem is responsible for the safe, timely execution of the incremental goals issued by the Behavioral Executive. The isolation of goal selection from goal execution promotes the development of powerful, highly general planning capabilities, which fall into two broad contexts: on-road driving and unstructured driving. A separate path-planning algorithm is used for each context, and the nature and capabilities of each planner have a strong influence on the overall capabilities of the system, including the nature of common failure scenarios and the options for attempting recovery maneuvers.

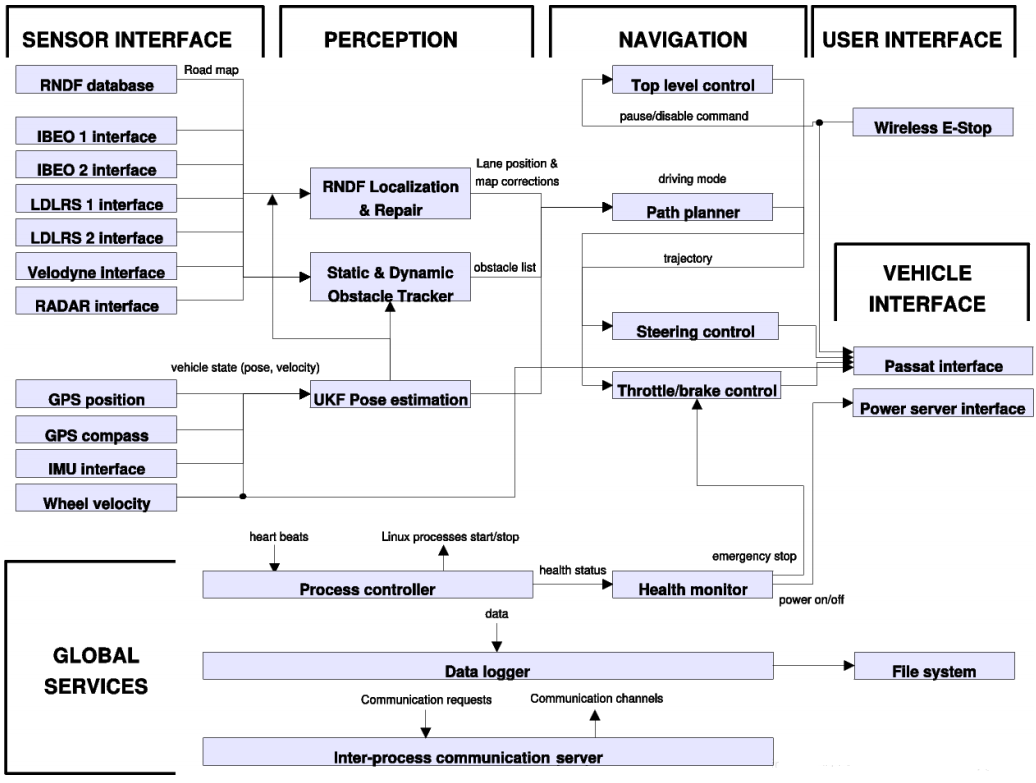

2. Junior (Stanford University)

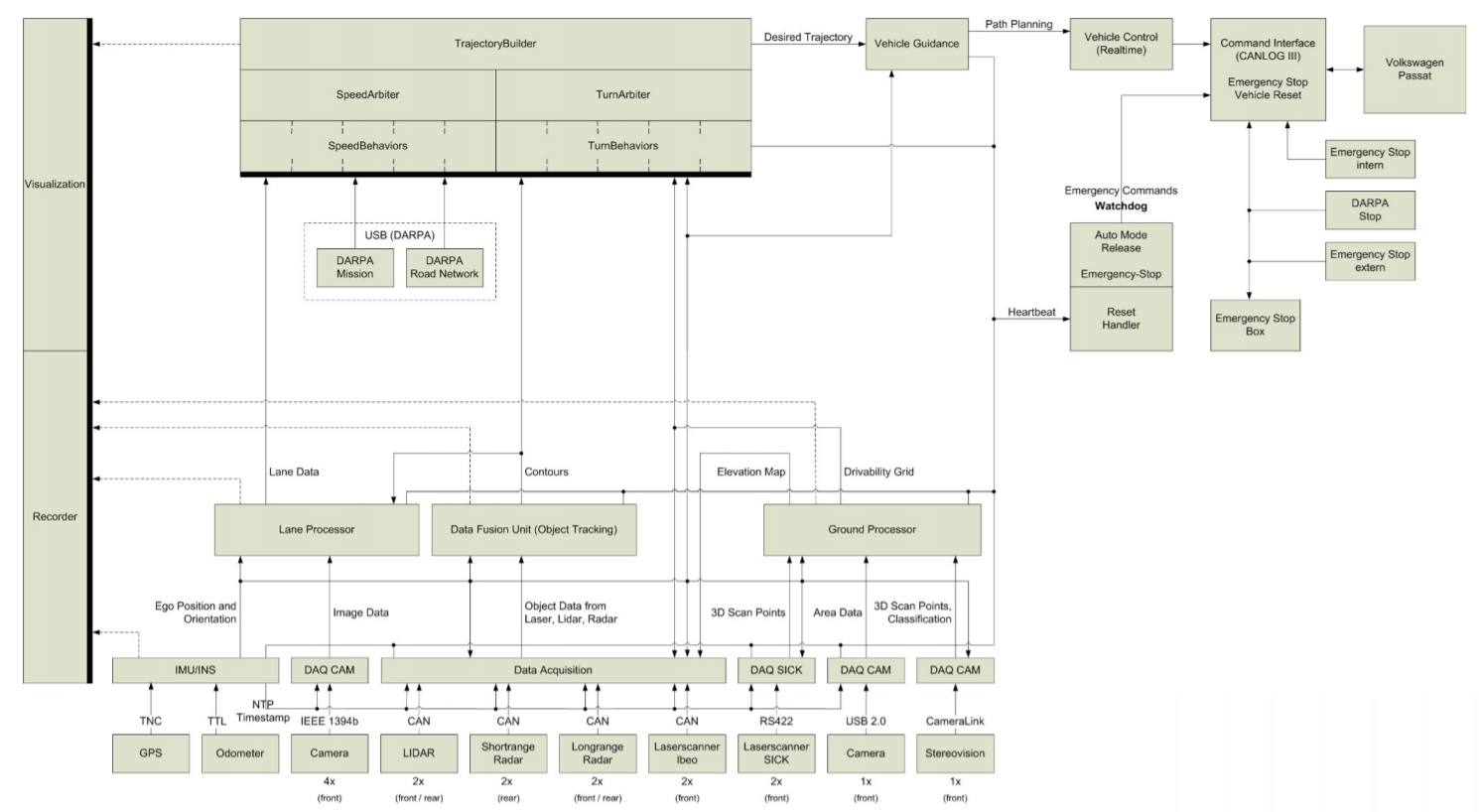

3. Caroline (Technische Universität Braunschweig)

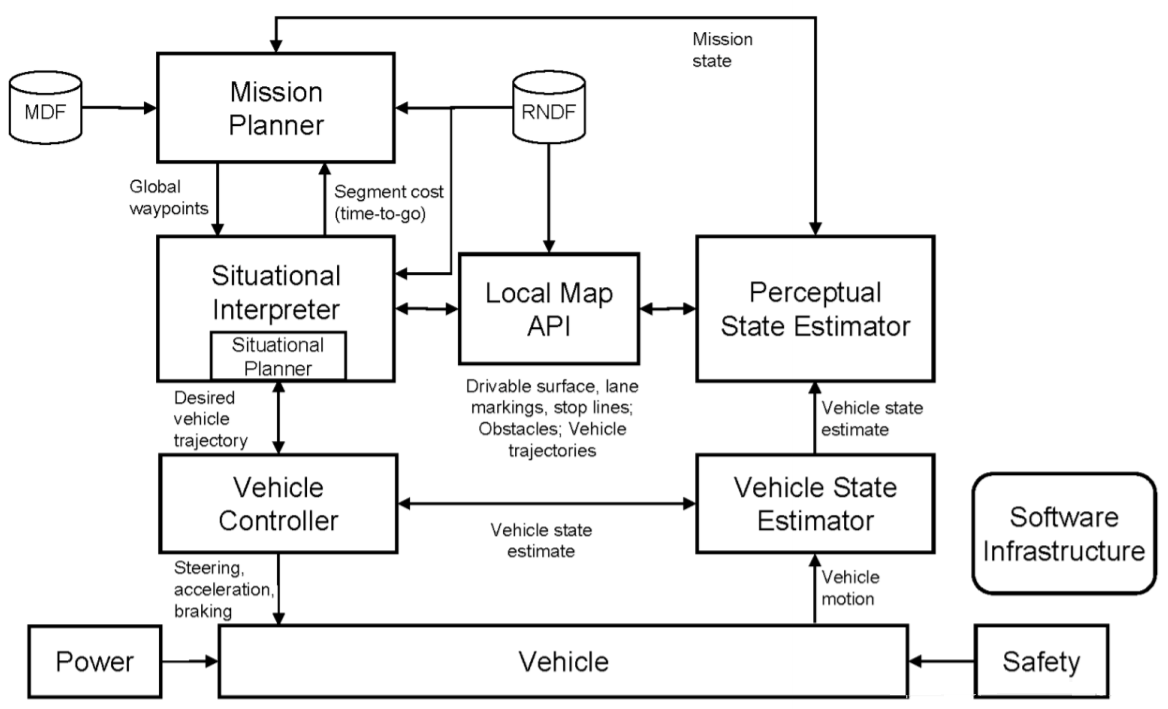

4. Team MIT (Massachusetts Institute of Technology)

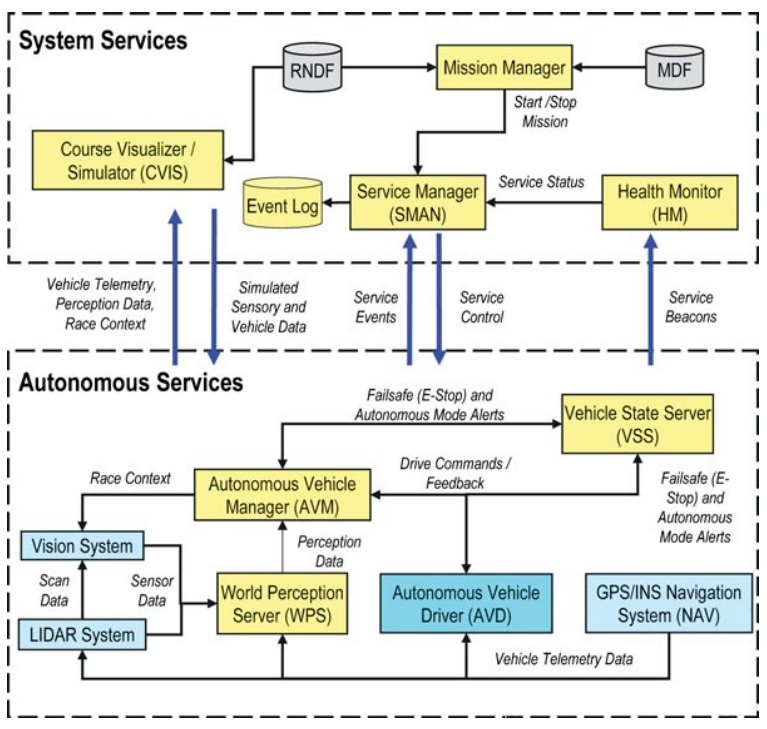

The role of each module is as follows:

The Mission Planner tracks the mission state and develops a high-level plan to accomplish the mission based on the RNDF and MDF. The output of the robust minimum time optimization is an ordered list of RNDF waypoints that are provided to the Situational Interpreter. In designing this subsystem, our goal has been to create a resilient planning architecture that ensures that the autonomous vehicle can respond reasonably (and make progress) under unexpected conditions that may occur on the challenge course.

The Perceptual State Estimator comprises algorithms that, using lidar, radar, vision and navigation sensors, detect and track cars and other obstacles, delineate the drivable road surface, detect and track lane markings and stop lines, and estimate the vehicle’s pose.

The Local Map API provides an efficient interface to perceptual data, answering queries from the Situational Interpreter and Situational Planner about the validity of potential motion paths with respect to detected obstacles and lane markings.
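A path-validity query of this kind can be sketched as a collision check between a candidate path and the detected obstacles. The sketch assumes obstacles are approximated as circles and the vehicle as a disc of a fixed radius. `path_is_valid` and its parameters are hypothetical and do not describe the MIT team's actual API.

```python
import math
from typing import Iterable, Tuple

Point = Tuple[float, float]            # (x, y) in meters
Obstacle = Tuple[float, float, float]  # (x, y, radius) in meters

def path_is_valid(path: Iterable[Point], obstacles: Iterable[Obstacle],
                  vehicle_radius: float = 1.5) -> bool:
    """Return False if any sampled path point lies within collision distance of an obstacle.

    Only the sampled points are checked, so the path must be sampled densely
    enough that no obstacle can fit between consecutive points.
    """
    obstacles = list(obstacles)
    for px, py in path:
        for ox, oy, r in obstacles:
            if math.hypot(px - ox, py - oy) < r + vehicle_radius:
                return False
    return True
```

A real map interface would also test paths against lane markings and would use a spatial index rather than this brute-force loop. The question it answers ("is this path clear?") is the same.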

The Situational Interpreter uses the mission plan and the situational awareness embedded in the Local Map API to determine the mode state of the vehicle and the environment. This information is used to determine what goal points should be considered by the Situational Planner and what sets of rules, constraints, and performance/robustness weights should be applied. The Situational Interpreter provides inputs to the Mission Planner about any inferred road blockages or traffic delays, and controls transitions amongst different system operating modes.

The Situational Planner identifies and optimizes a kino-dynamically feasible vehicle trajectory that moves towards the RNDF waypoint selected by the Mission Planner and Situational Interpreter using the constraints given by the Situational Interpreter and the situational awareness embedded in the Local Map API. Uncertainty in local situational awareness is handled through rapid replanning and constraint tightening. The Situational Planner also accounts explicitly for vehicle safety, even with moving obstacles. The output is a desired vehicle trajectory, specified as an ordered list of waypoints (each with position, velocity, and heading) that are provided to the Vehicle Controller.
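The trajectory format described above, an ordered list of waypoints with position, velocity, and heading, can be modeled as follows, together with a simple feasibility check. The two limits and their default values are illustrative assumptions. The actual planner enforced a much richer vehicle model.

```python
import math
from dataclasses import dataclass
from typing import List

@dataclass
class Waypoint:
    x: float        # m
    y: float        # m
    v: float        # m/s
    heading: float  # rad

def is_feasible(traj: List[Waypoint], max_accel: float = 3.0,
                max_lat_accel: float = 4.0) -> bool:
    """Check longitudinal and lateral acceleration between consecutive waypoints (assumed limits)."""
    for a, b in zip(traj, traj[1:]):
        ds = math.hypot(b.x - a.x, b.y - a.y)
        if ds == 0.0:
            continue
        # From v1^2 = v0^2 + 2*a*s, the constant acceleration over the segment is:
        accel = (b.v ** 2 - a.v ** 2) / (2.0 * ds)
        # Approximate curvature as heading change per meter (wrapped to [-pi, pi]).
        dheading = math.atan2(math.sin(b.heading - a.heading),
                              math.cos(b.heading - a.heading))
        kappa = abs(dheading) / ds
        v = max(a.v, b.v)
        # Lateral acceleration on an arc is v^2 * curvature.
        if abs(accel) > max_accel or v * v * kappa > max_lat_accel:
            return False
    return True
```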

The Vehicle Controller uses the inputs from the Perceptual State Estimator to execute the low-level control necessary to track the desired paths and velocity profiles issued by the Situational Planner.
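A common textbook way to track such a path is pure pursuit steering combined with a proportional speed loop. This technique is swapped in for illustration and is not necessarily what the MIT controller used. The wheelbase and gain values are assumptions, and the pose is taken to be the rear-axle position.

```python
import math

def pure_pursuit_steering(x, y, yaw, target_x, target_y, wheelbase=2.8):
    """Front-wheel steering angle (rad) whose arc takes the rear axle at (x, y, yaw) through the target."""
    dx, dy = target_x - x, target_y - y
    alpha = math.atan2(dy, dx) - yaw  # angle between heading and the line to the target
    ld = math.hypot(dx, dy)           # lookahead distance
    # Pure pursuit law: delta = atan(2 * L * sin(alpha) / ld)
    return math.atan2(2.0 * wheelbase * math.sin(alpha), ld)

def speed_command(v_current, v_desired, kp=0.5):
    """Proportional throttle (+) / brake (-) command, clipped to [-1, 1]."""
    return max(-1.0, min(1.0, kp * (v_desired - v_current)))
```

The target point would be taken from the planner's waypoint list at some lookahead distance ahead of the vehicle. The desired speed would come from that waypoint's velocity field.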

The Safety Module monitors sensor data, overriding vehicle control as necessary to avoid collisions. This module addresses safety pervasively through its interactions with vehicle hardware, firmware, and software, and careful definition of system operating modes.
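The override idea can be illustrated with a time-to-collision (TTC) check on the nearest obstacle ahead. The source does not say how the Safety Module decides when to intervene. The TTC threshold and the full-brake response below are assumptions made for this sketch.

```python
def safety_override(gap_m, ego_speed, obstacle_speed, planner_cmd, ttc_threshold_s=2.0):
    """Pass the normal command through unless time-to-collision drops below the threshold.

    planner_cmd: throttle (+) / brake (-) in [-1, 1] from the normal control path.
    Speeds are along the direction of travel, in m/s.
    """
    closing_speed = ego_speed - obstacle_speed
    if closing_speed <= 0.0:
        return planner_cmd  # not closing in on the obstacle
    ttc = gap_m / closing_speed
    if ttc < ttc_threshold_s:
        return -1.0  # override: command full braking (assumed response)
    return planner_cmd
```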

5. Odin (Virginia Tech)

6. NaviGATOR (University of Florida)

7. AnnieWAY (Karlsruhe Institute of Technology)

8. TerraMax (Oshkosh Defense)

8. "The First Technical Book on Driverless Cars" (《第一本无人驾驶技术书》, Liu Shaoshan)

9. abiggg's blog

10. "Principles and Practice of Driverless Cars" (《无人驾驶原理与实践》, Shen Zebang)

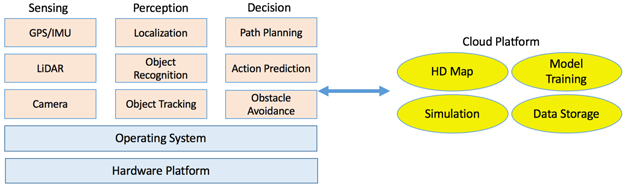

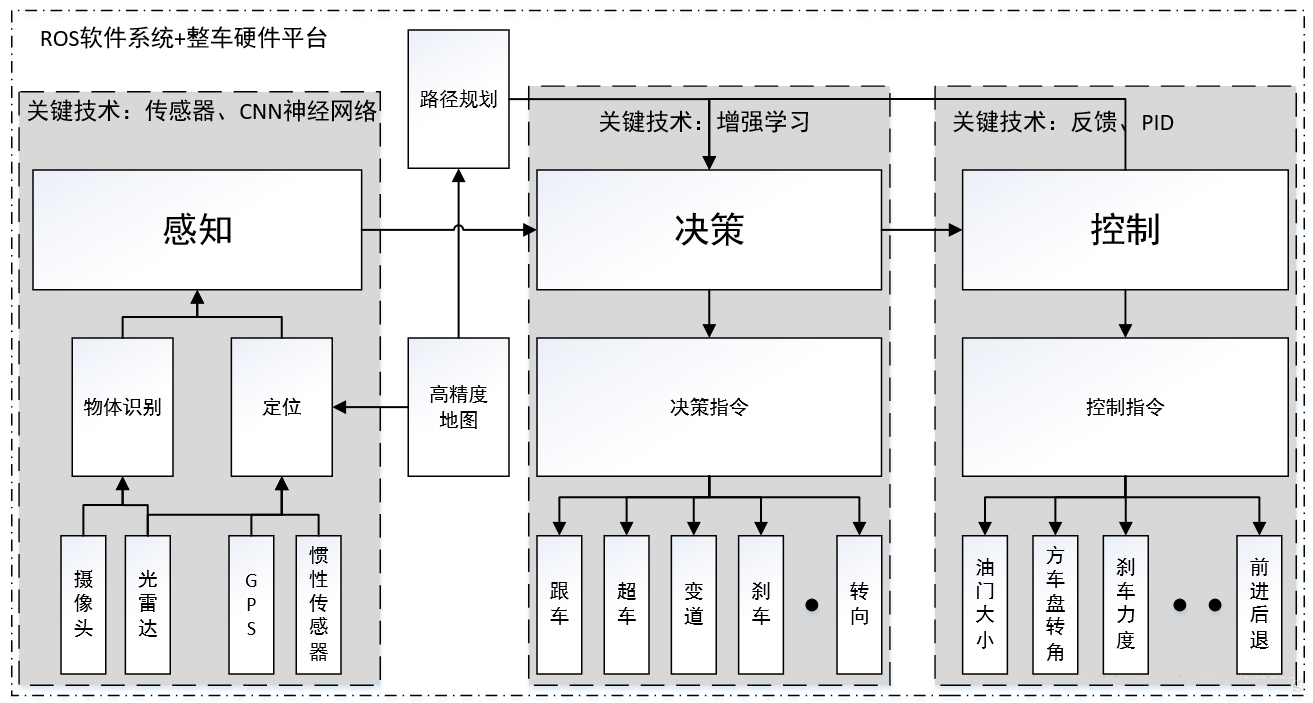

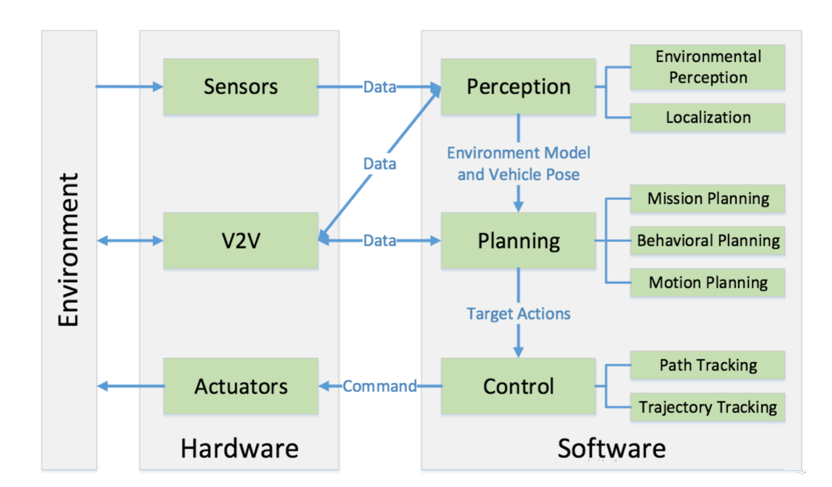

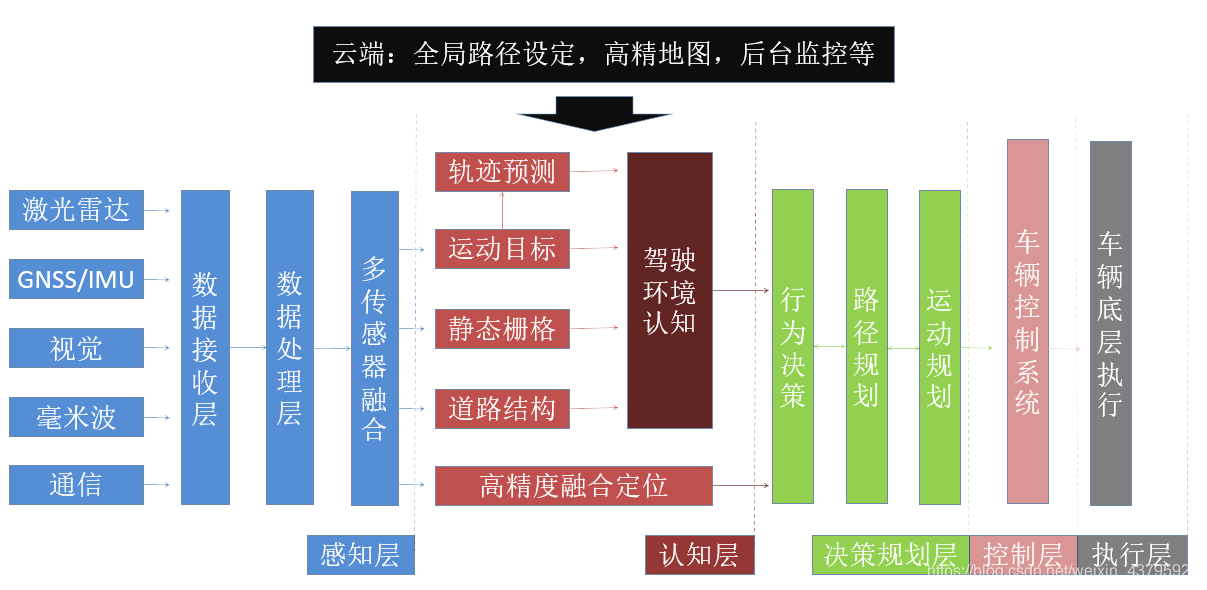

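Perception is the ability of a driverless system to collect information from its environment and extract relevant knowledge from it. Environmental Perception refers specifically to scene understanding, that is, the semantic classification of data such as obstacle positions, detected road signs and markings, and detected pedestrians and vehicles.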

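Localization is generally also considered part of perception. It is the driverless vehicle's ability to determine its position relative to the environment.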

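Planning is the process by which a driverless vehicle makes purposeful decisions toward some goal. For a driverless vehicle, that goal is usually to get from the origin to the destination while avoiding obstacles and continually optimizing its trajectory and behavior to keep passengers safe and comfortable. The planning layer is usually subdivided into three layers: Mission Planning, Behavioral Planning, and Motion Planning.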

Finally, control is the driverless vehicle's ability to precisely execute the planned actions, which come from the higher layers.

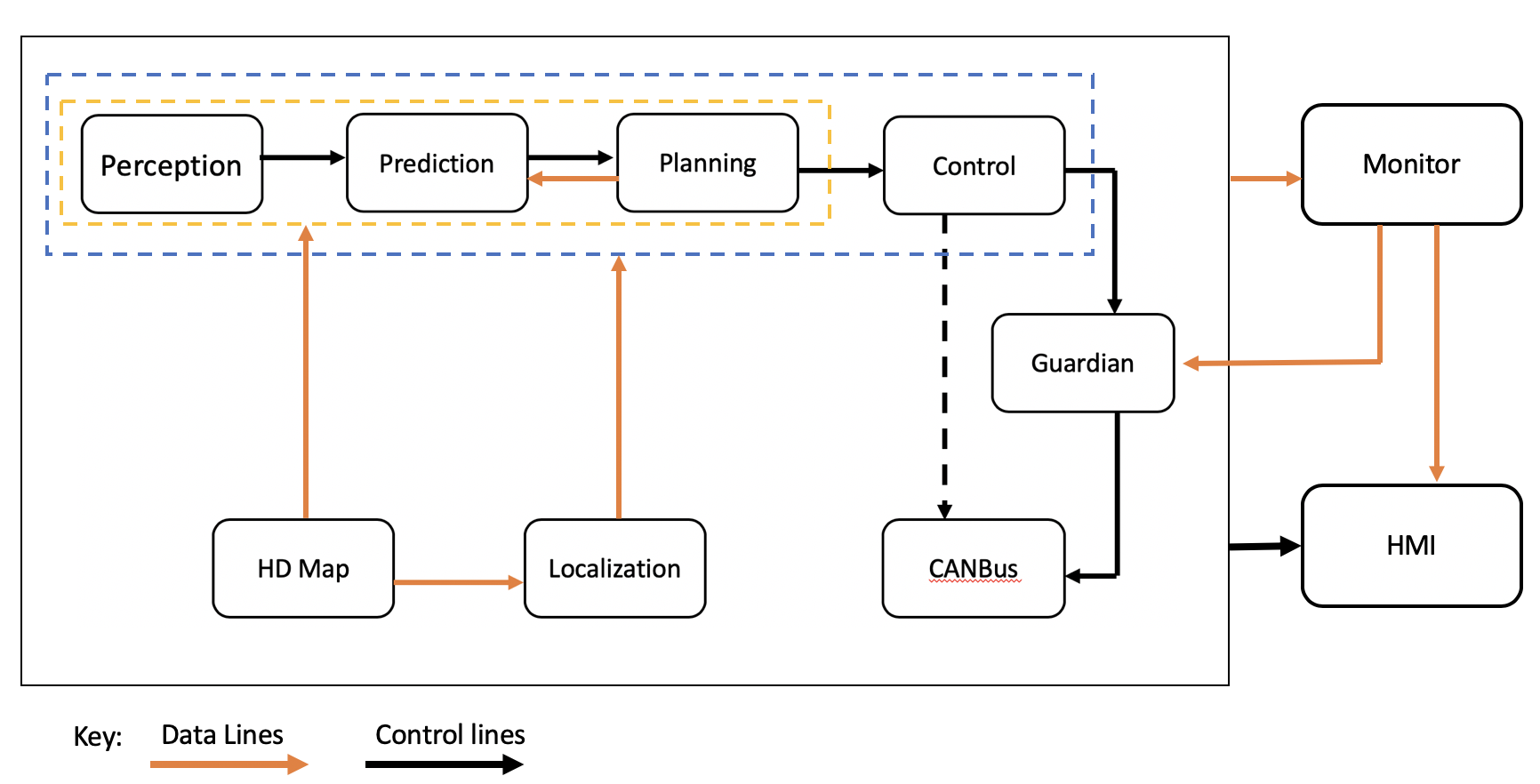

11. Apollo (Baidu)

Real Time Operating System: typically a Linux operating system customized for autonomous driving, with strong real-time guarantees, high concurrency, and low latency.

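Runtime Framework: a scheduling framework for the algorithm modules, built on top of the operating system layer. It is mainly responsible for message communication between modules, resource allocation, and execution scheduling. The main frameworks today are the open-source ROS (Robot Operating System) and Baidu's in-house Cybertron framework.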
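The inter-module messaging role of a runtime framework can be illustrated with a tiny in-process publish/subscribe bus. This is a hypothetical sketch only. It does not use the ROS or Cybertron APIs, and the topic name in the usage example is made up. Real frameworks add serialization, inter-process transport, and scheduling on top of this pattern.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

class MessageBus:
    """Minimal topic-based publish/subscribe bus (illustrative, single-threaded)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> None:
        # Deliver synchronously to every callback registered on this topic.
        for callback in self._subscribers[topic]:
            callback(message)

# Usage: a planning module listening to a (hypothetical) perception topic.
bus = MessageBus()
received = []
bus.subscribe("/perception/obstacles", received.append)
bus.publish("/perception/obstacles", {"id": 1, "x": 12.0})
```

Decoupling modules through named topics means perception does not need to know which modules consume its output. This decoupling is the main reason such frameworks sit between the OS and the algorithm modules.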

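Map Engine (HD map): provides information services such as lane-line topology, traffic-light positions, traffic-sign positions and types, and road speed limits, which the perception, decision/planning, and localization modules query.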

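Localization: provides centimeter-level, high-precision localization to every algorithm module, including the vehicle's world coordinates, attitude, and heading.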
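Because localization supplies the vehicle's world coordinates and heading, other modules can convert detections from the vehicle frame into the world frame with a 2D rigid transform. This is a minimal planar sketch under stated assumptions: x points forward, y points left, and heading is measured counterclockwise from the world x-axis. Roll, pitch, and elevation are ignored.

```python
import math

def vehicle_to_world(px, py, ego_x, ego_y, ego_heading):
    """Map a vehicle-frame point (px, py) to world coordinates (planar, assumed conventions)."""
    c, s = math.cos(ego_heading), math.sin(ego_heading)
    # Rotate by the heading, then translate by the vehicle's world position.
    return (ego_x + c * px - s * py,
            ego_y + s * px + c * py)
```

For example, a vehicle at (10, 5) facing along the world y-axis (heading pi/2) sees an obstacle 1 m straight ahead. The obstacle's world position is approximately (10, 6).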

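Perception: its main functions are detecting lane markings, recognizing traffic-light states, detecting, tracking, and classifying vehicles and pedestrians, and recognizing traffic signs.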

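Planning: plans the driving route in real time from localization and perception information combined with the destination, and supplies trajectory points to the autonomous vehicle.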

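Control: issues vehicle control commands based on the planned path. These mainly cover steering, throttle, braking, lights, horn, and in-cabin air conditioning.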

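HMI (Human-Machine Interface): lets passengers or remote operators interact with the vehicle. Typical functions are planning the driving route, turning on the in-vehicle entertainment system, and viewing the vehicle's driving status.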

12. CHC Navigation (华测导航)

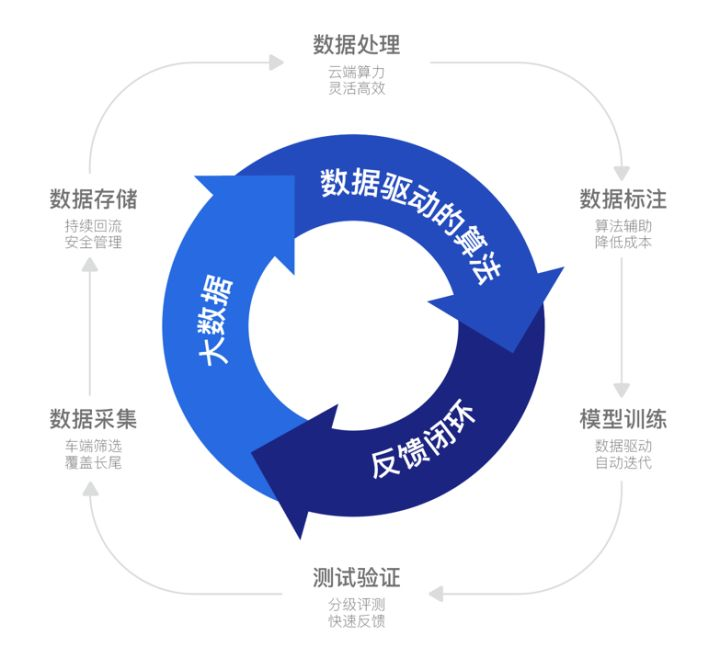

13. Momenta
